Instagram announced a significant update on Tuesday to enhance privacy and mitigate the intrusive effects of social media for users under 18, responding to growing concerns about children's online safety.

The primary update is the introduction of "Teen Accounts," designed to safeguard users aged 13 to 17 from mental health challenges often associated with social media use.

In the coming weeks, accounts of users under 18 will automatically be set to private, meaning only approved followers can view their posts. Additionally, Instagram, owned by Meta, will halt notifications for minors between 10 p.m. and 7 a.m. to encourage better sleep habits. The platform is also introducing more parental supervision tools, including a feature that allows parents to view the accounts their teenager has recently messaged.

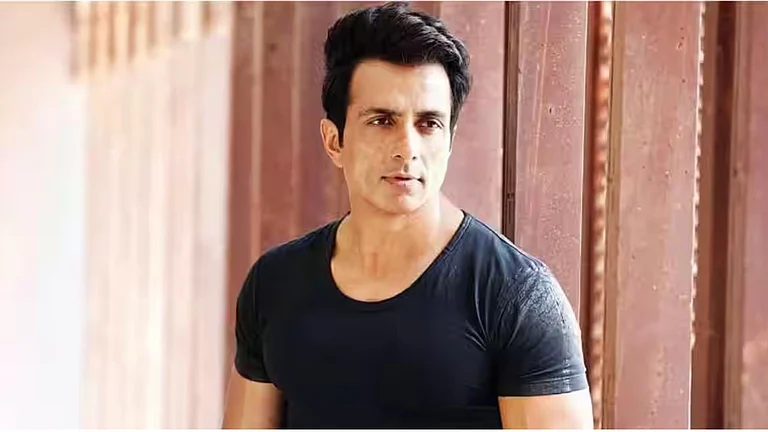

Adam Mosseri, the head of Instagram, said these changes aim to address parents' key worries: inappropriate contact, harmful content, and excessive screen time.

“We decided to focus on what parents think because they know better what’s appropriate for their children than any tech company, any private company, any senator or policymaker or staffer or regulator,” Mr. Mosseri said in an interview. Teen Accounts are meant to automatically place minors into age-appropriate experiences on the app, he added.

The new account settings will be implemented for users in the U.S., U.K., Australia, and Canada.

Key Features of Teen Accounts

Default Private Accounts

Accounts for users aged 13 to 17 will be set to private by default, preventing strangers from easily viewing or interacting with their profiles.

Sensitive Content Restrictions

Teens' exposure to harmful or inappropriate content will be reduced.

Messaging Restrictions

Direct messages (DMs) will be tightly controlled: teens will be able to receive messages only from people they follow, which reduces unwanted or harmful contact.

Time Limit Reminders

Teens will receive prompts encouraging them to set limits on their screen time, promoting healthier app usage.

Limited Interactions

Teens' interactions with adult accounts that don’t follow them back will be restricted, lowering the risk of unsolicited messages or predatory behavior.

Age Verification

Instagram will introduce new age verification technology. Through a partnership with Yoti, teens will be able to verify their age with a video selfie or, if necessary, ask mutual friends to verify it for them.

Parental Supervision

Meta is also introducing a “Parents’ Supervision Feature,” giving parents more oversight of their teens' Instagram activity:

Parents can block access to the app during certain hours to regulate screen time.

Parents can see the topics their teen is viewing and whom they are messaging.

Parents can set daily screen time limits.

In recent years, parents and advocacy groups have warned that platforms like Instagram, TikTok, and Snapchat frequently expose minors to bullying, predators, sexual extortion, and harmful content, including self-harm and eating disorders.

These updates represent one of the most extensive efforts by a social media platform to address teenagers’ online experiences, as concerns over youth safety have intensified.

In June, U.S. Surgeon General Dr. Vivek Murthy called for cigarette-style warning labels on social media platforms to highlight their potential mental health risks. In July, the Senate passed the bipartisan Kids Online Safety Act, aimed at enforcing safety and privacy regulations for children and teens on social media. Some states have also introduced social media restrictions.

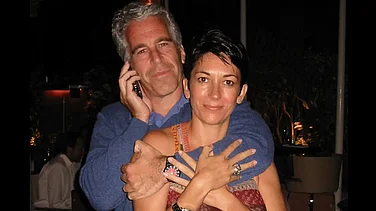

Dozens of state attorneys general have sued Meta, accusing the company, which also owns Facebook and WhatsApp, of intentionally addicting children to its platforms while downplaying the associated risks. Meta CEO Mark Zuckerberg has faced significant criticism over the dangers social media poses to young users. During a congressional hearing on child online safety in January, lawmakers urged Zuckerberg to apologize to families whose children had died by suicide following social media abuse.

“I’m sorry for everything you have all been through,” Zuckerberg said to the families at the hearing.

It remains unclear how effective Instagram’s new changes will be. The company, then known as Facebook, has been pledging to protect minors from inappropriate content and contact since at least 2007, when state attorneys general raised concerns that Facebook was filled with sexually explicit material and allowed adults to solicit teenagers. Since then, Meta has introduced various tools, features, and settings aimed at improving youth safety on its platforms, with mixed results.