Madhya Pradesh Police on Thursday received a 'self harm' alert from social networking app Instagram, leading to the prevention of a potential suicide.

The 17-year-old girl, who was allegedly considering suicide, was traced following the Instagram alert and counselled by police.

The Times of India reported that the Indore unit of the cyber crime wing received a 'self harm' alert from Instagram and passed it on to its headquarters in Bhopal, the MP capital. The user's IP address was traced to Satna district, and local police personnel were alerted.

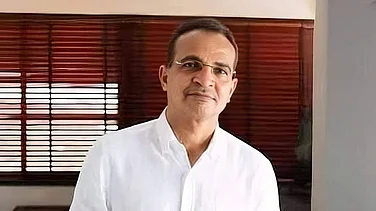

"We located the girl and called her over, along with mother. She told us that she only wanted to scare her boyfriend as he was not talking to her for five days. She was sent home after a session of counseling," Satna Superintendent of Police Ashutosh Gupta told ToI.

Vaibhav Srivastava, ASP at the cyber cell HQ, told ToI that the girl had been posting pictures that seemingly showed her preparing to end her life, including one of a small cut on her wrist, and that these were flagged on Instagram.

"This is the second time that we received a 'suicide' alert from Instagram. The last time, the girl was traced to Chhattisgarh. We informed the local police and saved her," added Srivastava.

Instagram has a policy of not allowing suicide- or self-harm-related content on its platform. In a February 2019 update, Instagram said it would not allow any imagery of suicide or self-harm, such as cutting oneself.

"We will not allow any graphic images of self-harm, such as cutting on Instagram – even if it would previously have been allowed as admission. We have never allowed posts that promote or encourage suicide or self harm, and will continue to remove it when reported," said Instagram at the time.

The BBC reported in 2020 that Instagram has provisions for alerting emergency services when it considers this necessary.

"Posts identified as harmful by the algorithm can be referred to human moderators, who choose whether to take further action - including directing the user to help organisations and informing emergency services," the BBC reported, adding that at the time such provisions existed only outside Europe.